24. Interpretation and importance of Bayes’ theorem in statistical inference, and the concepts of prior probability, posterior probability, and likelihood.

Bayes’ theorem is a formula that describes how to update the probabilities of hypotheses when given evidence. It follows directly from the definition of conditional probability, yet it supports powerful reasoning about a wide range of problems involving belief updates: the probability assigned to an event is revised by incorporating new information.

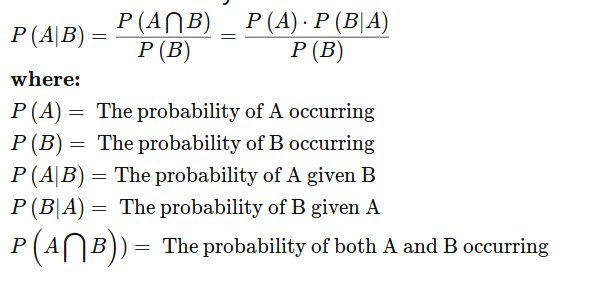

The formula for Bayes’ theorem, for two events A and B with P(B) > 0, is:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

Bayesian inference is a method of statistical inference in which Bayes’ theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called “Bayesian probability”.
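The idea of updating as more evidence arrives can be sketched in Python. This is a minimal illustration rather than a definitive implementation: the candidate coin biases, the uniform starting belief, and the flip sequence are hypothetical values chosen for the example, and `update` is a helper name introduced here.

```python
def update(belief, likelihoods):
    """One Bayesian update: multiply prior by likelihood, then normalize."""
    unnormalized = [b * l for b, l in zip(belief, likelihoods)]
    evidence = sum(unnormalized)  # P(data), the normalizing constant
    return [u / evidence for u in unnormalized]

# Hypothetical setup: three candidate values for P(heads), equally likely a priori.
biases = [0.25, 0.5, 0.75]
belief = [1 / 3, 1 / 3, 1 / 3]

# Hypothetical observations: 1 = heads, 0 = tails.
flips = [1, 1, 0, 1]

for flip in flips:
    # Likelihood of this single flip under each candidate bias.
    likelihoods = [p if flip == 1 else 1 - p for p in biases]
    # The posterior after one flip serves as the prior for the next flip.
    belief = update(belief, likelihoods)

for p, b in zip(biases, belief):
    print(f"P(bias = {p} | flips) = {b:.3f}")
```

Processing the flips one at a time gives the same final belief as a single update with the likelihood of the whole sequence, which is why the posterior from one step can be reused as the prior for the next.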

In Bayesian statistics, the posterior probability of a random event or an uncertain proposition is the conditional probability that is assigned after the relevant evidence or background is taken into account. Similarly, the posterior probability distribution is the probability distribution of an unknown quantity, treated as a random variable, conditional on the evidence obtained from an experiment or survey. “Posterior”, in this context, means after taking into account the relevant evidence related to the particular case being examined. For instance, there is a (“non-posterior”) probability of a person finding buried treasure if they dig in a random spot, and a posterior probability of finding buried treasure if they dig in a spot where their metal detector rings.
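The treasure example can be made concrete with a small Python sketch. All three input probabilities are hypothetical numbers picked for illustration, and `posterior` is a helper name introduced here; the point is only to show how a small prior is revised once the detector rings.

```python
def posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Return P(H | E) via Bayes' theorem for a binary hypothesis H."""
    # Law of total probability gives the evidence term P(E).
    evidence = p_evidence_given_h * prior + p_evidence_given_not_h * (1 - prior)
    return p_evidence_given_h * prior / evidence

# Hypothetical numbers, chosen only for illustration.
prior_treasure = 0.001        # P(treasure) at a random spot
p_ring_given_treasure = 0.95  # P(detector rings | treasure)
p_ring_given_empty = 0.02     # P(detector rings | no treasure)

post_treasure = posterior(prior_treasure, p_ring_given_treasure, p_ring_given_empty)
print(f"P(treasure | ring) = {post_treasure:.4f}")
```

With these assumed inputs the posterior is many times larger than the prior, yet still small, because false alarms on empty ground are far more common than treasure.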

Posterior probability is the probability of the parameter O after observing the sample X. In other words, it is the probability of O given the sample X: P(O|X).

In Bayesian statistical inference, a prior probability distribution, often simply called the prior, of an uncertain quantity is the probability distribution that would express one’s beliefs about this quantity before some evidence is taken into account. For example, the prior could be the probability distribution representing the relative proportions of voters who will vote for a particular politician in a future election. The unknown quantity may be a parameter of the model or a latent variable rather than an observable variable.

Prior probability is the probability of the parameter O before observing the sample X: P(O).

In statistics, the likelihood function (often simply called the likelihood) expresses the probability (or probability density) of a sample of data given a set of model parameter values. The likelihood is typically formulated as a function of the parameters. However, it does not express a probability over the parameter space; for fixed parameter values it equals the joint probability (or density) of the random sample, which takes values in the sample space, not the parameter space.
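A short Python sketch can illustrate why the likelihood is not a probability distribution over the parameter. It assumes a binomial model with hypothetical values for the number of trials, the observed successes, and the fixed parameter; `binomial_pmf` is a helper name introduced here.

```python
from math import comb

def binomial_pmf(k, n, theta):
    """P(k successes in n trials | success probability theta)."""
    return comb(n, k) * theta**k * (1 - theta) ** (n - k)

n, k_observed, theta_fixed = 10, 7, 0.6  # hypothetical values

# Fixed parameter, varying data: a probability distribution over the sample space.
total_over_data = sum(binomial_pmf(k, n, theta_fixed) for k in range(n + 1))

# Fixed data, varying parameter: the likelihood function. Integrate it over
# theta in [0, 1] with a simple midpoint rule.
steps = 10_000
area_over_theta = sum(
    binomial_pmf(k_observed, n, (i + 0.5) / steps) for i in range(steps)
) / steps

print(f"sum over data   = {total_over_data:.4f}")
print(f"area over theta = {area_over_theta:.4f}")
```

Summed over all possible samples the probabilities add up to 1, while the same expression integrated over the parameter comes out near 1/11 for these values, so it cannot be read as a distribution over the parameter space.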

Likelihood is the probability of the sample X given the parameter O: P(X|O).

$$P(O \mid X) = \frac{P(X \mid O)\,P(O)}{P(X)}$$
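As a short worked step in the same notation: the denominator P(X) does not depend on O. For a discrete parameter it can be expanded with the law of total probability, so the posterior is proportional to the likelihood times the prior. The index $O'$ is a symbol introduced here for the sum; for a continuous parameter the sum becomes an integral.

$$P(X) = \sum_{O'} P(X \mid O')\,P(O'), \qquad P(O \mid X) \propto P(X \mid O)\,P(O)$$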

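The Bayesian approach to point estimation typically maximizes the posterior probability P(O|X) (maximum a posteriori, or MAP, estimation), whereas the classical (frequentist) approach maximizes the likelihood P(X|O) (maximum likelihood estimation, or MLE). The two estimates coincide when the prior P(O) is uniform.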
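The difference between the two estimates can be sketched in Python with a simple grid search. The binomial model, the data (7 successes in 10 trials), and the Beta(5, 5) prior are all assumptions made for this illustration, and the function names are introduced here.

```python
from math import log

def log_likelihood(theta, k, n):
    """Log of the binomial likelihood, dropping the constant binomial coefficient."""
    return k * log(theta) + (n - k) * log(1 - theta)

def log_prior(theta, a, b):
    """Log of a Beta(a, b) prior density, up to an additive constant."""
    return (a - 1) * log(theta) + (b - 1) * log(1 - theta)

k, n = 7, 10  # hypothetical data: 7 successes in 10 trials
a, b = 5, 5   # hypothetical prior, concentrated around 0.5

# Candidate parameter values strictly inside (0, 1).
grid = [i / 1000 for i in range(1, 1000)]

# Maximum likelihood: maximize P(X | O) alone.
mle = max(grid, key=lambda t: log_likelihood(t, k, n))

# Maximum a posteriori: maximize P(X | O) * P(O), i.e. the sum of the logs.
map_estimate = max(grid, key=lambda t: log_likelihood(t, k, n) + log_prior(t, a, b))

print(f"MLE = {mle:.3f}, MAP = {map_estimate:.3f}")
```

Under these assumptions the likelihood alone peaks at the observed frequency 7/10, while the posterior peaks closer to 0.5 because the prior pulls the estimate toward its own center.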